RAG Evaluation Quickstart

Learn to evaluate retrieval-augmented generation (RAG) pipelines and systems, such as RAG QA, summarizers, and customer support chatbots, using deepeval.

Overview

RAG evaluation involves evaluating the retriever and generator as separate components. This is because in a RAG pipeline, the final output is only as good as the context you've fed into your LLM.

In this 5 min quickstart, you'll learn how to:

- Evaluate your RAG pipeline end-to-end

- Test the retriever and generator as separate components

- Evaluate multi-turn RAG

Prerequisites

- Install deepeval

- A Confident AI API key (recommended). Sign up for one here.

Confident AI allows you to view and share your testing reports. Set your API key in the CLI:

export CONFIDENT_API_KEY="confident_us..."

Run Your First RAG Eval

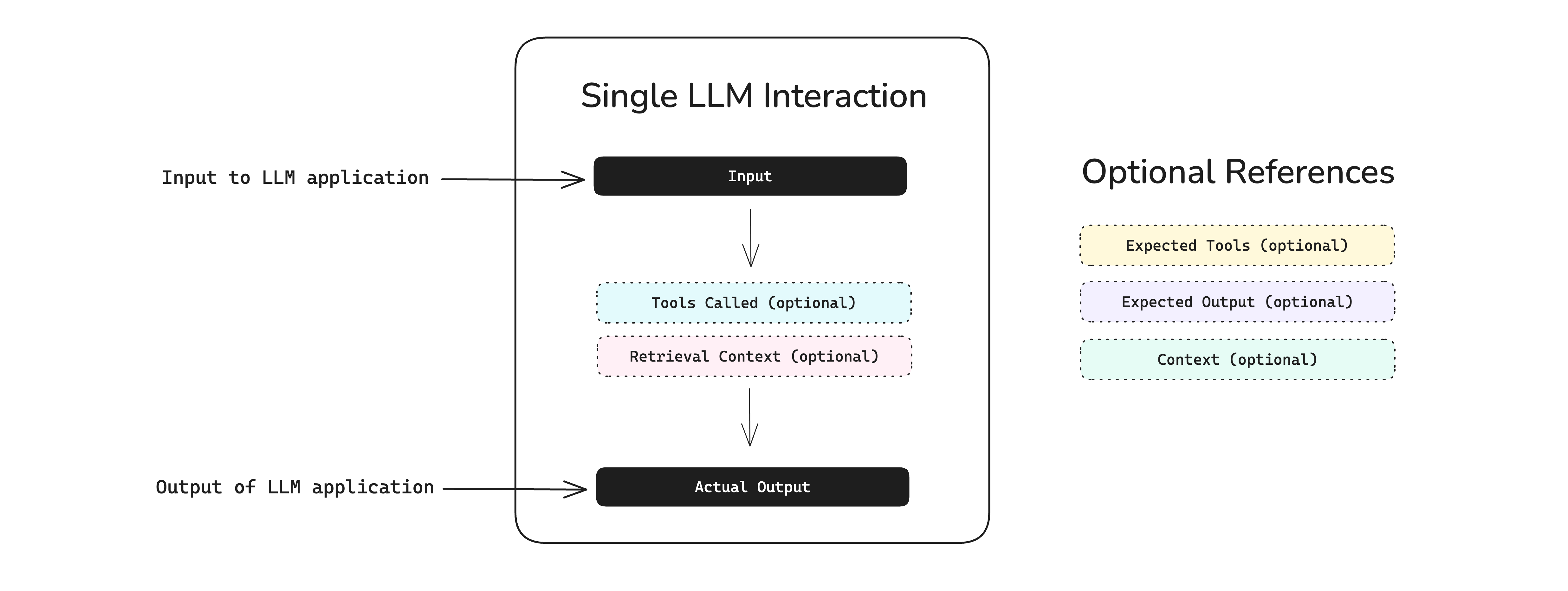

End-to-end RAG evaluation treats your entire LLM app as a standalone RAG pipeline. In deepeval, a single-turn interaction with your RAG pipeline is modelled as an LLM test case:

The retrieval_context in the diagram above is crucial, as it represents the text chunks that were retrieved at evaluation time.

deepeval provides a wide selection of LLM models that you can easily choose from and run evaluations with.

OpenAI:

from deepeval.metrics import AnswerRelevancyMetric

answer_relevancy_metric = AnswerRelevancyMetric(model="gpt-4.1")

Anthropic:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import AnthropicModel

model = AnthropicModel("claude-3-7-sonnet-latest")
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Gemini:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import GeminiModel

model = GeminiModel("gemini-2.5-flash")
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Ollama:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import OllamaModel

model = OllamaModel("deepseek-r1")
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Grok:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import GrokModel

model = GrokModel("grok-4.1")
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Azure OpenAI:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import AzureOpenAIModel

model = AzureOpenAIModel(
    model="gpt-4.1",
    deployment_name="Test Deployment",
    api_key="Your Azure OpenAI API Key",
    api_version="2025-01-01-preview",
    base_url="https://example-resource.azure.openai.com/",
    temperature=0
)
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Amazon Bedrock:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import AmazonBedrockModel

model = AmazonBedrockModel(
    model="anthropic.claude-3-opus-20240229-v1:0",
    region="us-east-1",
    generation_kwargs={"temperature": 0},
)
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Vertex AI:

from deepeval.metrics import AnswerRelevancyMetric
from deepeval.models import GeminiModel

model = GeminiModel(
    model="gemini-1.5-pro",
    project="Your Project ID",
    location="us-central1",
    temperature=0
)
answer_relevancy_metric = AnswerRelevancyMetric(model=model)

Setup RAG pipeline

Modify your RAG pipeline to return the retrieved contexts alongside the LLM response.

Python:

def rag_pipeline(input):
    ...
    return 'RAG output', ['retrieved context 1', 'retrieved context 2', ...]

LangGraph:

from langchain_core.messages import HumanMessage
from langchain.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings, ChatOpenAI

embeddings = OpenAIEmbeddings()
vectorstore = FAISS.load_local("./faiss_index", embeddings)
retriever = vectorstore.as_retriever()
llm = ChatOpenAI(model="gpt-4")

def rag_pipeline(input):
    # Extract retrieval context
    retrieved_docs = retriever.get_relevant_documents(input)
    context_texts = [doc.page_content for doc in retrieved_docs]

    # Generate response: append the retrieved chunks to the user input
    prompt = input + "\n\n" + "\n\n".join(context_texts)
    state = {"messages": [HumanMessage(content=prompt)]}
    # The chat model takes the message list and returns a single message
    result = llm.invoke(state["messages"])
    return result.content, context_texts

LangChain:

from langchain_openai import ChatOpenAI
from langchain.vectorstores import Chroma
from langchain.chains import RetrievalQA

llm = ChatOpenAI(model="gpt-4")
vectorstore = Chroma(persist_directory="./chroma_db")
retriever = vectorstore.as_retriever(search_kwargs={"k": 3})

def rag_pipeline(input):
    # Extract retrieval context
    retrieved_docs = retriever.get_relevant_documents(input)
    context_texts = [doc.page_content for doc in retrieved_docs]

    # Generate response
    qa_chain = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        retriever=retriever,
        return_source_documents=True
    )
    result = qa_chain.invoke({"query": input})
    return result["result"], context_texts

LlamaIndex:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

documents = SimpleDirectoryReader("./data").load_data()
index = VectorStoreIndex.from_documents(documents)
query_engine = index.as_query_engine()

def rag_pipeline(input):
    # Generate response
    response = query_engine.query(input)

    # Extract retrieval context
    context_texts = []
    if hasattr(response, 'source_nodes'):
        context_texts = [node.text for node in response.source_nodes]

    return str(response), context_texts

Instead of changing your code to return these data, we'll show a better way to run RAG evals in the next section.
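To make the return shape concrete, here is a minimal, dependency-free sketch. The `DOCS` corpus, the keyword-overlap `retrieve` function, and the templated answer are all hypothetical stand-ins for a real vector store and LLM; only the `(output, retrieved contexts)` return shape mirrors the snippets above.

```python
import re

# Hypothetical in-memory corpus standing in for a vector store.
DOCS = [
    "Coldplay tickets can be purchased on the official tour website.",
    "Refunds are available up to 7 days before the show.",
    "The venue opens two hours before doors.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query and keep the top k."""
    query_tokens = tokenize(query)
    ranked = sorted(
        DOCS,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def rag_pipeline(query: str) -> tuple[str, list[str]]:
    """Return the generated answer together with the chunks it was based on."""
    contexts = retrieve(query)
    # Stand-in for an LLM call: echo the best-matching chunk.
    answer = f"Based on our records: {contexts[0]}"
    return answer, contexts


actual_output, retrieved_contexts = rag_pipeline(
    "How do I purchase tickets to a Coldplay concert?"
)
```

The two returned values map directly onto the `actual_output` and `retrieval_context` fields of a test case.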

Create a test case

Create a test case using retrieval context and LLM output from your RAG pipeline. Optionally provide an expected output if you plan to use contextual precision and contextual recall metrics.

from deepeval.test_case import LLMTestCase

input = 'How do I purchase tickets to a Coldplay concert?'
actual_output, retrieved_contexts = rag_pipeline(input)

test_case = LLMTestCase(
    input=input,
    actual_output=actual_output,
    retrieval_context=retrieved_contexts,
    expected_output='optional expected output'
)

Define metrics

Define RAG metrics to evaluate your RAG pipeline, or define your own using G-Eval.

from deepeval.metrics import AnswerRelevancyMetric, ContextualPrecisionMetric

answer_relevancy = AnswerRelevancyMetric(threshold=0.8)

contextual_precision = ContextualPrecisionMetric(threshold=0.8)

What RAG metrics are available?

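DeepEval offers a total of 5 RAG metrics, which are:

- Answer Relevancy

- Faithfulness

- Contextual Relevancy

- Contextual Precision

- Contextual Recall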

Each metric measures a different parameter in your RAG pipeline's quality, and each can help you determine the best prompts, models, or retriever settings for your use-case.
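As an illustration of what a retriever-side metric can measure, the sketch below computes a rank-aware precision score from per-chunk relevance verdicts. The function name `weighted_precision` and the hard-coded verdicts are assumptions for illustration only; in deepeval the verdicts come from an LLM judge, and its exact scoring may differ from this formula.

```python
def weighted_precision(relevance: list[bool]) -> float:
    """Average precision@k over the ranks k that hold a relevant chunk.

    `relevance[i]` says whether the chunk retrieved at rank i + 1 is relevant.
    Relevant chunks ranked higher push the score towards 1.0.
    """
    total_relevant = sum(relevance)
    if total_relevant == 0:
        return 0.0

    score = 0.0
    relevant_so_far = 0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            relevant_so_far += 1
            score += relevant_so_far / rank  # precision at this rank
    return score / total_relevant


# Same chunks, different ordering: relevant chunks first scores higher.
well_ranked = weighted_precision([True, True, False])
badly_ranked = weighted_precision([False, True, True])
```

A score like this depends only on the retriever's ranking, which is why it helps with tuning retriever settings rather than prompts.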

Run an evaluation

Run an evaluation on the LLM test case you previously created using the metrics defined above.

from deepeval import evaluate

...

evaluate([test_case], metrics=[answer_relevancy, contextual_precision])

🎉🥳 Congratulations! You've just run your first RAG evaluation. Here's what happened:

- When you call evaluate(), deepeval runs all your metrics against all test_cases

- All metrics output a score between 0-1, with a threshold defaulted to 0.5

- Metrics like contextual_precision evaluate based on the retrieval_context, whereas answer_relevancy checks the actual_output of your test case

- A test case passes only if all metrics pass
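The pass/fail rule described in the list above can be sketched in a few lines of plain Python. The helper names `metric_passes` and `case_passes`, the example scores, and the assumption that a score equal to the threshold counts as a pass are illustrative and not taken from deepeval's source.

```python
DEFAULT_THRESHOLD = 0.5  # default threshold mentioned above


def metric_passes(score: float, threshold: float = DEFAULT_THRESHOLD) -> bool:
    """A metric passes when its 0-1 score reaches the threshold (assumed inclusive)."""
    return score >= threshold


def case_passes(results: dict[str, tuple[float, float]]) -> bool:
    """`results` maps a metric name to (score, threshold); every metric must pass."""
    return all(
        metric_passes(score, threshold) for score, threshold in results.values()
    )


# Hypothetical scores for one test case, using the 0.8 thresholds defined earlier.
results = {
    "answer_relevancy": (0.9, 0.8),
    "contextual_precision": (0.7, 0.8),
}
passed = case_passes(results)  # one metric is below its threshold
```

Here the test case fails even though answer relevancy scored well, because contextual precision fell below its threshold.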

This creates a test run, which is a "snapshot"/benchmark of your RAG pipeline at any point in time.

Viewing on Confident AI (recommended)

If you've set your CONFIDENT_API_KEY, test runs will appear automatically on Confident AI, the DeepEval platform.

If you haven't logged in, you can still upload the test run to Confident AI from local cache:

deepeval view

Evaluate Retriever

deepeval allows you to evaluate RAG components individually. This also means you don't have to return retrieval_contexts in awkward places just to feed data into the evaluate() function.

Trace your retriever

Attach the @observe decorator to functions/methods that make up your retriever. These will represent individual components in your RAG pipeline.

from deepeval.tracing import observe

@observe()
def retriever(input):
    # Your retriever implementation goes here
    pass
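For intuition about what a tracing decorator does, here is a toy stand-in written in plain Python. `toy_observe` and the `SPANS` list are hypothetical names and are not deepeval's implementation; the sketch only shows how decorating each function lets every component's input and output be recorded separately.

```python
import functools

# Hypothetical in-memory trace store; a real tracer would export these spans.
SPANS: list[dict] = []


def toy_observe(metrics=None):
    """Toy decorator factory that records one span per decorated call."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            output = func(*args, **kwargs)
            # Record the span once the component has finished running.
            SPANS.append(
                {
                    "name": func.__name__,
                    "input": args,
                    "output": output,
                    "metrics": list(metrics or []),
                }
            )
            return output

        return wrapper

    return decorator


@toy_observe(metrics=["contextual_relevancy"])
def retriever(query):
    return [f"chunk about {query}"]


@toy_observe()
def rag_pipeline(query):
    chunks = retriever(query)
    return f"Answer using {len(chunks)} chunk(s)"


rag_pipeline("refund policy")
```

After the call, `SPANS` holds one entry for the retriever and one for the pipeline, so a metric attached to the retriever span can be scored without changing what `rag_pipeline` returns.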

Set CONFIDENT_TRACE_FLUSH=1 in your CLI to prevent traces from being lost if the program terminates early.

export CONFIDENT_TRACE_FLUSH=1

Define metrics & test cases

Create a retriever-focused metric. You'll then need to:

- Add it to your component

- Create an LLMTestCase in that component with retrieval_context

from deepeval.tracing import observe, update_current_span
from deepeval.test_case import LLMTestCase
from deepeval.metrics import ContextualRelevancyMetric

contextual_relevancy = ContextualRelevancyMetric(threshold=0.6)

@observe(metrics=[contextual_relevancy])
def retriever(query):
    # Your retriever implementation goes here
    update_current_span(
        test_case=LLMTestCase(input=query, retrieval_context=["..."])
    )

Run an evaluation

Finally, use the dataset iterator to invoke your RAG system on a list of goldens.

from deepeval.dataset import EvaluationDataset, Golden

...

# Create dataset

dataset = EvaluationDataset(goldens=[Golden(input='This is a test query')])

# Loop through dataset

for golden in dataset.evals_iterator():
    retriever(golden.input)

✅ Done. With this setup, a simple for loop is all that's required.

You can also evaluate your retriever if it is nested within a RAG pipeline:

from deepeval.dataset import EvaluationDataset, Golden

...

def rag_pipeline(query):
    @observe(metrics=[contextual_relevancy])
    def retriever(query):
        pass

    # Call the nested retriever so that its span is recorded and evaluated
    retriever(query)

# Create dataset
dataset = EvaluationDataset(goldens=[Golden(input='This is a test query')])

# Loop through dataset
for golden in dataset.evals_iterator():
    rag_pipeline(golden.input)

Evaluate Generator

The same applies to evaluating the generator of your RAG pipeline, only this time you would trace your generator with metrics focused on your generator instead.

Trace your generator

Attach the @observe decorator to functions/methods that make up your generator:

from deepeval.tracing import observe

@observe()
def generator(query):
    # Your generator implementation goes here
    pass

Define metrics & test cases

Create a generator-focused metric. You'll then need to:

- Add it to your component

- Create an LLMTestCase with the required parameters

For example, the FaithfulnessMetric requires retrieval_context, while AnswerRelevancyMetric doesn't.
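A quick way to reason about required parameters is to check a test case's fields before attaching a metric. The sketch below uses plain dictionaries; the `REQUIRED_FIELDS` table and the `missing_fields` helper are hypothetical, and the field sets are a simplified reading of the sentence above rather than a lookup into deepeval itself.

```python
# Simplified, assumed view of which test case fields each metric needs.
REQUIRED_FIELDS = {
    "AnswerRelevancyMetric": {"input", "actual_output"},
    "FaithfulnessMetric": {"input", "actual_output", "retrieval_context"},
}


def missing_fields(metric_name: str, test_case: dict) -> set[str]:
    """Return the required fields that are absent or None in the test case."""
    provided = {key for key, value in test_case.items() if value is not None}
    return REQUIRED_FIELDS[metric_name] - provided


# A generator-only test case with no retrieval context attached.
case = {
    "input": "What is the refund window?",
    "actual_output": "You can get a refund up to 7 days before the show.",
    "retrieval_context": None,
}

relevancy_gaps = missing_fields("AnswerRelevancyMetric", case)
faithfulness_gaps = missing_fields("FaithfulnessMetric", case)
```

With this test case, answer relevancy has everything it needs, while faithfulness would still need `retrieval_context`.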

from deepeval.tracing import observe, update_current_span
from deepeval.test_case import LLMTestCase
from deepeval.metrics import AnswerRelevancyMetric

answer_relevancy = AnswerRelevancyMetric(threshold=0.6)

@observe(metrics=[answer_relevancy])
def generator(query, text_chunks):
    # Your generator implementation goes here
    update_current_span(test_case=LLMTestCase(input=query, actual_output="..."))

Run an evaluation

Finally, use the dataset iterator to invoke your RAG system on a list of goldens.

from deepeval.dataset import EvaluationDataset, Golden

...

# Create dataset

dataset = EvaluationDataset(goldens=[Golden(input='This is a test query')])

# Loop through dataset

for golden in dataset.evals_iterator():
    # Pass the text chunks your generator expects alongside the query
    generator(golden.input, text_chunks=["..."])

✅ Done. You just learnt how to evaluate the generator as a standalone component.

You can also combine retriever and generator evals:

from deepeval.dataset import EvaluationDataset, Golden

...

def rag_pipeline(query):
    @observe(metrics=[contextual_relevancy])
    def retriever(query) -> list[str]:
        text_chunks = ["..."]
        update_current_span(test_case=LLMTestCase(input=query, retrieval_context=text_chunks))
        return text_chunks

    @observe(metrics=[answer_relevancy])
    def generator(query, text_chunks):
        actual_output = "..."
        update_current_span(test_case=LLMTestCase(input=query, actual_output=actual_output))
        return actual_output

    text_chunks = retriever(query)
    return generator(query, text_chunks)

# Create dataset
dataset = EvaluationDataset(goldens=[Golden(input='This is a test query')])

# Loop through dataset
for golden in dataset.evals_iterator():
    rag_pipeline(golden.input)

Multi-Turn RAG Evals

deepeval also lets you evaluate RAG in multi-turn systems. This is especially useful for chatbots that rely on RAG to generate responses, such as customer support chatbots.

You should first read this section on multi-turn evals if you haven't already.

Create a test case

Create a ConversationalTestCase by passing in a list of Turns from an existing conversation, similar to OpenAI's message format.

from deepeval.test_case import ConversationalTestCase, Turn

test_case = ConversationalTestCase(
    turns=[
        Turn(role="user", content="I'd like to buy a ticket to a Coldplay concert."),
        Turn(
            role="assistant",
            content="Great! I can help you with that. Which city would you like to attend?",
            retrieval_context=["Concert cities: New York, Los Angeles, Chicago"]
        ),
        Turn(role="user", content="New York, please."),
        Turn(
            role="assistant",
            content="Perfect! I found VIP and standard tickets for the Coldplay concert in New York. Which one would you like?",
            retrieval_context=["VIP ticket details", "Standard ticket details"]
        )
    ]
)

Since your chatbot uses RAG, each turn from the assistant should also include the retrieval_context parameter.
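Before building the test case, it can help to confirm that every assistant turn in a logged conversation actually carries its retrieved chunks. The sketch below works on plain dictionaries in an OpenAI-style message format; the `assistant_turns_missing_context` helper and the sample conversation are hypothetical and not part of deepeval.

```python
# Hypothetical logged conversation; the last assistant turn lost its context.
turns = [
    {"role": "user", "content": "I'd like to buy a ticket to a Coldplay concert."},
    {
        "role": "assistant",
        "content": "Which city would you like to attend?",
        "retrieval_context": ["Concert cities: New York, Los Angeles, Chicago"],
    },
    {"role": "user", "content": "New York, please."},
    {"role": "assistant", "content": "I found VIP and standard tickets."},
]


def assistant_turns_missing_context(turns: list[dict]) -> list[int]:
    """Return the indices of assistant turns with no (or empty) retrieval_context."""
    return [
        index
        for index, turn in enumerate(turns)
        if turn["role"] == "assistant" and not turn.get("retrieval_context")
    ]


missing = assistant_turns_missing_context(turns)
```

Any index returned here points at a turn that a retrieval-aware multi-turn metric would have no context to check against.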

Create metrics

Define a multi-turn RAG metric to evaluate your chatbot system:

from deepeval.metrics import TurnRelevancy, TurnFaithfulness

from deepeval.test_case import TurnParams

turn_faithfulness = TurnFaithfulness()

turn_relevancy = TurnRelevancy()

Run an evaluation

Run an evaluation on the test case using the evaluate function and the conversational RAG metrics you've defined.

from deepeval import evaluate

...

evaluate([test_case], metrics=[turn_faithfulness, turn_relevancy])

Finally, run main.py:

python main.py

✅ Done. We left out many details from this multi-turn section, such as how to simulate user interactions instead, which you can read more about here.

Next Steps

Now that you have run your first RAG evals, you should:

- Customize your metrics: Include all 5 RAG metrics based on your use case.

- Prepare a dataset: If you don't have one, generate one as a starting point.

- Enable evals in production: Just replace metrics in @observe with a metric_collection string on Confident AI.

You'll be able to analyze performance over time on threads this way, and add them back to your evals dataset for further evaluation.