LLM Tracing

Tracing your LLM application helps you monitor its full execution from start to finish. With deepeval's @observe decorator, you can trace and evaluate any LLM interaction at any point in your app, no matter how complex it is.

Quick Summary

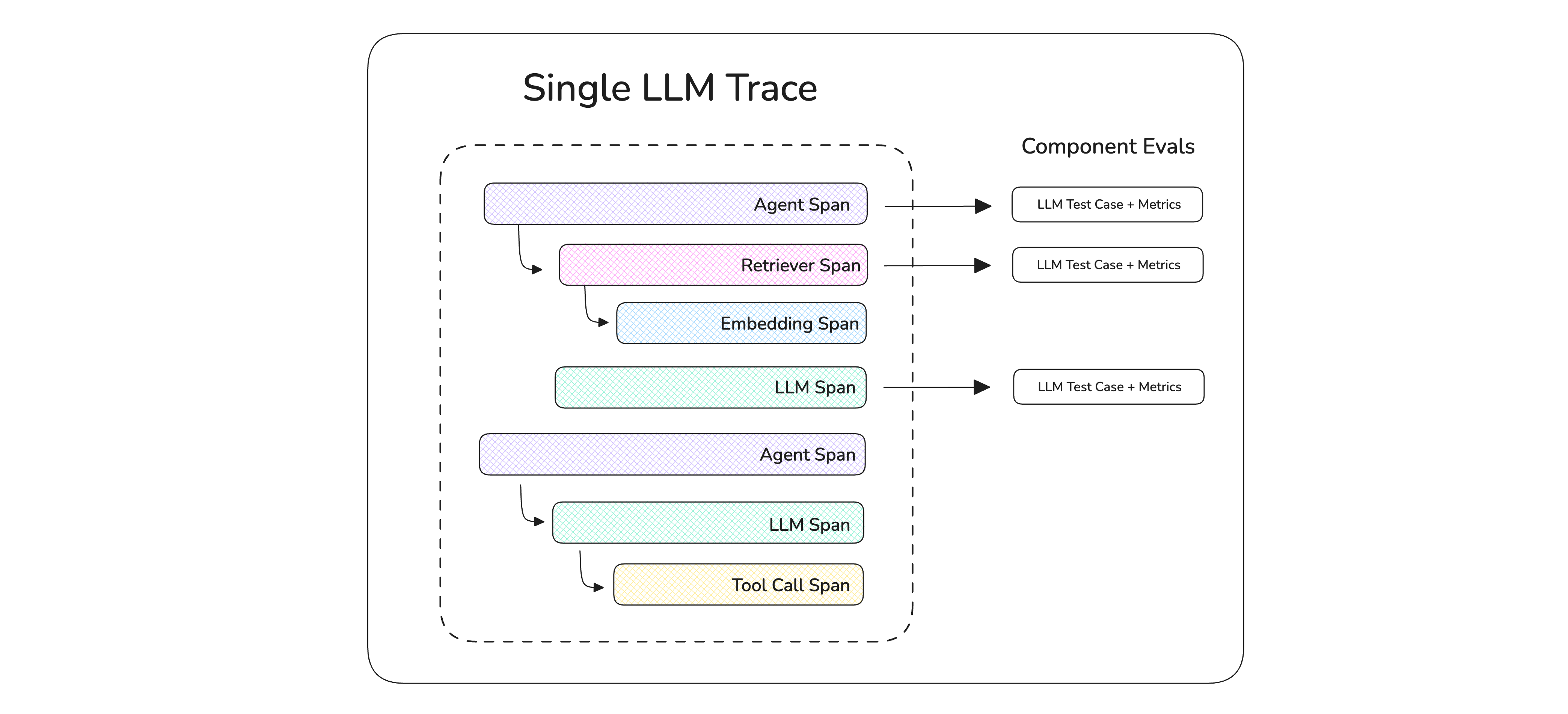

An LLM trace is made up of one or more individual spans. A span is a flexible, user-defined scope for evaluation or debugging.

Tracing allows you to run both end-to-end and component-level evals which you'll learn about in this guide.

Learn how deepeval's tracing is non-intrusive

deepeval's tracing is non-intrusive: it requires minimal code changes and doesn't add latency to your LLM application. It also:

- Uses concepts you already know: Tracing a component in your LLM app takes on average 3 lines of code, and uses the same LLMTestCases and metrics that you're already familiar with.

- Does not affect production code: If you're worried that tracing will affect your LLM calls in production, it won't. This is because the @observe decorators that you add for tracing are only invoked if called explicitly during evaluation.

- Non-opinionated: deepeval does not care what you consider a "component". In fact, a component can be anything, at any scope, as long as you're able to set your LLMTestCase within that scope for evaluation.

Tracing only runs when you want it to run, and takes 3 lines of code:

from deepeval.test_case import LLMTestCase
from deepeval.metrics import AnswerRelevancyMetric
from deepeval.tracing import observe, update_current_span
from openai import OpenAI

client = OpenAI()

@observe(metrics=[AnswerRelevancyMetric()])
def get_res(query: str):
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": query}]
    ).choices[0].message.content

    # Attach the input and output to the current span so the metric can evaluate them
    update_current_span(input=query, output=response)
    return response

Why Tracing?

Tracing your LLM applications allows you to:

- Generate test cases dynamically: Many components rely on upstream outputs. Tracing lets you define LLMTestCases at runtime as data flows through the system.

- Debug with precision: See exactly where and why things fail, whether it’s tool calls, intermediate outputs, or context retrieval steps.

- Run targeted metrics on specific components: Attach LLMTestCases to agents, tools, retrievers, or LLMs and apply metrics like answer relevancy or context precision, without needing to restructure your app.

- Run end-to-end evals with trace data: Use the evals_iterator with metrics to perform comprehensive evaluations using your traces.

Set Up Your First Trace

To set up tracing in your LLM app, you need to understand two key concepts:

- Trace: The full execution of your app, made up of one or more spans.

- Span: A specific component or unit of work—like an LLM call, tool invocation, or document retrieval.

The @observe decorator is the primary way to set up tracing for your LLM application.

Decorate your components

Any individual function that makes up part of your LLM application, or that is invoked only when necessary, can be classified as a component. You can decorate this component with deepeval's @observe decorator.

from openai import OpenAI
from deepeval.tracing import observe

client = OpenAI()

@observe()
def get_res(query: str):
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": query}]
    ).choices[0].message.content
    return response

The above get_res() component is treated as an individual span within a trace.

Add test cases inside components

You can assign individual test cases to a span by using the update_current_span function from deepeval. This allows you to create separate LLMTestCases on a component level.

from openai import OpenAI
from deepeval.tracing import observe, update_current_span
from deepeval.test_case import LLMTestCase

client = OpenAI()

@observe()
def get_res(query: str):
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": query}]
    ).choices[0].message.content

    # Create a component-level test case from the span's input and output
    update_current_span(input=query, output=response)
    return response

You can supply either an LLMTestCase or its individual parameters to update_current_span to create a component-level test case. Learn more in the Update Current Span section.

Get your traces

You can now get your traces by simply calling your observed function or application.

query = "This will get you a trace."

get_res(query)

🎉🥳 Congratulations! You just created your first trace with deepeval.

We highly recommend setting up Confident AI to look at your traces in an intuitive UI.

It's free to get started. Just run the following command:

deepeval login

Observe

The @observe decorator is a non-intrusive Python decorator that you can use on top of any component as you wish. It tracks the usage of the component whenever it is invoked to create a span.

A span can contain many child spans, forming a tree structure, just like how different components of your LLM application interact:

from deepeval.tracing import observe

@observe()
def generate(query: str) -> str:
    context = retrieve(query)
    # Your implementation
    return f"Output for given {query} and {context}."

@observe()
def retrieve(query: str) -> list[str]:
    # Your implementation
    return [f"Context for the given {query}"]

In the above example, the observed component generate calls another observed component, retrieve. This creates a nested span: generate, with retrieve inside it.

There are FOUR optional parameters when using the @observe decorator:

- [Optional] metrics: A list of metrics of type BaseMetric that will be used to evaluate your span.

- [Optional] name: The function name or a string specifying how this span is displayed on Confident AI.

- [Optional] type: A string specifying the type of span. The value can be any one of llm, retriever, tool, or agent. Any other value is treated as a custom span type.

- [Optional] metric_collection: The name of the metric collection you stored on Confident AI.

More about span types

For simplicity, we always recommend custom spans unless needed otherwise, since metrics only care about the scope of the span, and supplying a specified type is most useful only when using Confident AI. To summarize:

- Specifying a span type (like "llm") allows you to supply additional parameters in the @observe signature (e.g., the model used).

- This information becomes extremely useful for analysis and visualization if you're using deepeval together with Confident AI (highly recommended).

- Otherwise, for local evaluation purposes, span type makes no difference; evaluation still works the same way.

To learn more about the different span types, or to run LLM evaluations with tracing and a UI for visualization and debugging, visit the official Confident AI docs on LLM tracing.

deepeval uses Python context variables during evaluation so your code can access the active golden for each test case. You can retrieve it with get_current_golden() and pass its expected_output when you update a span or trace.
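As a rough illustration of the context-variable pattern, the sketch below shows how an evaluation loop could expose an "active golden" to application code without passing it as an argument. Everything here is hypothetical (ToyGolden, toy_get_current_golden, toy_evaluate); it only assumes the general behavior of Python's contextvars module and does not reproduce deepeval's code.

```python
import contextvars
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyGolden:
    """Hypothetical stand-in for a golden: an input plus an optional expected output."""
    input: str
    expected_output: Optional[str] = None


# Holds the golden for the test case currently being evaluated, if any.
_active_golden = contextvars.ContextVar("active_golden", default=None)


def toy_get_current_golden() -> Optional[ToyGolden]:
    """Return the active golden, or None when not running inside an evaluation."""
    return _active_golden.get()


def app(query: str) -> dict:
    # Application code reads the golden from context instead of taking it as a parameter.
    golden = toy_get_current_golden()
    expected = golden.expected_output if golden else None
    return {"input": query, "output": query.upper(), "expected_output": expected}


def toy_evaluate(goldens: list[ToyGolden]) -> list[dict]:
    results = []
    for golden in goldens:
        token = _active_golden.set(golden)  # make this golden the active one
        try:
            results.append(app(golden.input))
        finally:
            _active_golden.reset(token)  # restore the previous state
    return results


results = toy_evaluate([ToyGolden("hi", "HI"), ToyGolden("bye")])
outside = app("no golden here")  # called outside evaluation: no active golden
```

The `if golden else None` guard matters because the same function may also run outside an evaluation, where no golden is set.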

Update Current Span

The update_current_span method can be used to create a test case for the corresponding span. This is especially useful for doing component-level evals or debugging your application.

from deepeval.tracing import observe, update_current_span
from deepeval.test_case import LLMTestCase

@observe()
def generate(query: str) -> str:
    context = retrieve(query)
    # Your implementation
    res = f"Output for given {query} and {context}."

    # Create a span-level test case from a full LLMTestCase
    update_current_span(test_case=LLMTestCase(
        input=query,
        actual_output=res,
        retrieval_context=context
    ))
    return res

@observe()
def retrieve(query: str) -> list[str]:
    # Your implementation
    context = [f"Context for the given {query}"]

    # Create a span-level test case from individual parameters
    update_current_span(input=query, retrieval_context=context)
    return context

There are TWO ways to create test cases when using the update_current_span function:

- [Optional] test_case: Takes an LLMTestCase to create a span-level test case for that component.

- Or, you can give the values of LLMTestCase directly by using the following attributes:

  - [Optional] input
  - [Optional] output
  - [Optional] retrieval_context
  - [Optional] context
  - [Optional] expected_output
  - [Optional] tools_called
  - [Optional] expected_tools

You can use the individual LLMTestCase params in the update_current_span function to override the values of the test_case you passed.
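The override behavior can be pictured as a simple merge: start from the test case that was passed, then replace any field for which an individual parameter was also given. The sketch below uses hypothetical names (ToyTestCase, toy_update) and assumes that the output parameter maps to the actual_output field, as the examples above suggest. It illustrates the idea only and is not deepeval's actual logic.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass
class ToyTestCase:
    """Hypothetical, trimmed-down stand-in for a test case."""
    input: Optional[str] = None
    actual_output: Optional[str] = None
    expected_output: Optional[str] = None


def toy_update(
    test_case: Optional[ToyTestCase] = None,
    *,
    input: Optional[str] = None,
    output: Optional[str] = None,
    expected_output: Optional[str] = None,
) -> ToyTestCase:
    """Merge individual parameters over an optional base test case."""
    # Assumption: `output` corresponds to the `actual_output` field.
    overrides = {
        "input": input,
        "actual_output": output,
        "expected_output": expected_output,
    }
    # Only parameters that were explicitly provided take part in the merge.
    overrides = {key: value for key, value in overrides.items() if value is not None}
    if test_case is None:
        return ToyTestCase(**overrides)
    return replace(test_case, **overrides)


base = ToyTestCase(input="What is tracing?", actual_output="draft answer")
merged = toy_update(test_case=base, output="final answer")
params_only = toy_update(input="What is a span?", output="A unit of work.")
```

Here merged keeps the input from base but takes the overridden output, while params_only is built from the individual parameters alone.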

Update Current Trace

You can update the end-to-end test case for a trace by using the update_current_trace function provided by deepeval:

from openai import OpenAI
from deepeval.tracing import observe, update_current_trace

@observe()
def llm_app(query: str) -> str:

    @observe()
    def retriever(query: str) -> list[str]:
        chunks = ["List", "of", "text", "chunks"]
        # Record the retrieved chunks on the trace-level test case
        update_current_trace(retrieval_context=chunks)
        return chunks

    @observe()
    def generator(query: str, text_chunks: list[str]) -> str:
        res = OpenAI().chat.completions.create(
            model="gpt-4o",
            messages=[{"role": "user", "content": query}]
        ).choices[0].message.content
        # Record the end-to-end input and output on the trace
        update_current_trace(input=query, output=res)
        return res

    return generator(query, retriever(query))

There are TWO ways to create test cases when using the update_current_trace function:

- [Optional] test_case: Takes an LLMTestCase to create an end-to-end test case for the trace.

- Or, you can give the values of LLMTestCase directly by using the following attributes:

  - [Optional] input
  - [Optional] output
  - [Optional] retrieval_context
  - [Optional] context
  - [Optional] expected_output
  - [Optional] tools_called
  - [Optional] expected_tools

You can use the individual LLMTestCase params in the update_current_trace function to override the values of the test_case you passed.

Using goldens

In deepeval, a golden is the reference test case used by your metrics, for example, to compare actual and expected outputs. During evaluation, you can read the active golden and pass its expected_output to spans or traces.

from deepeval.dataset import get_current_golden
from deepeval.tracing import observe, update_current_span, update_current_trace
from deepeval.test_case import LLMTestCase

@observe()
def tool(input: str):
    # produce your model or tool output
    result = ...  # <- your code here

    golden = get_current_golden()  # active golden for this test
    expected = golden.expected_output if golden else None

    # Option A: pass via LLMTestCase to the span
    update_current_span(
        test_case=LLMTestCase(
            input=input,
            actual_output=result,
            expected_output=expected,
        )
    )

    # Option B: set it on the trace
    update_current_trace(
        test_case=LLMTestCase(
            input=input,
            actual_output=result,
            expected_output=expected,
        )
    )
    return result

Notes

- expected_output may be provided via LLMTestCase or expected_output=.

- If you don’t want to use the dataset’s expected_output, pass your own string.

Environment Variables

If you run your @observe decorated LLM application outside of evaluate() or assert_test(), you'll notice some logs appearing in your console. To disable them completely, just set the following environment variables:

CONFIDENT_TRACE_VERBOSE=0

CONFIDENT_TRACE_FLUSH=0
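If you prefer to set these from Python rather than from your shell, one option is to assign them through os.environ at the very top of your entry point. This is a minimal sketch: the exact moment at which deepeval reads these variables is not stated here, so setting them before importing or calling any traced code is a conservative assumption rather than a documented requirement.

```python
import os

# Set these before importing or running any @observe-decorated code.
# (Assumption: the values must be in place before tracing starts.)
os.environ["CONFIDENT_TRACE_VERBOSE"] = "0"
os.environ["CONFIDENT_TRACE_FLUSH"] = "0"

# ...then import and call your traced application as usual.
```

Environment variable values are always strings, which is why "0" is quoted.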

Next Steps

Now that you have your traces, you can run either end-to-end or component-level evals.